鸢尾花分类 iris 数据集包含 150 个样本,对应数据集的每行数据。每行数据包含每个样本的四个特征和样本的类别信息,iris 数据集是用来给鸢尾花做分类的数据集,每个样本包含了花萼长度、花萼宽度、花瓣长度、花瓣宽度四个特征,用神经网络训练一个分类器,分类器可以通过样本的四个特征来判断样本属于山鸢尾、变色鸢尾还是维吉尼亚鸢尾。

导包 1 2 3 4 5 6 7 8 import torchimport torch.nn as nnimport torch.nn.functional as Fimport numpy as npimport pandas as pdfrom torch.utils.data import Dataset, DataLoader, TensorDatasetfrom sklearn.utils import shuffleimport matplotlib.pyplot as plt

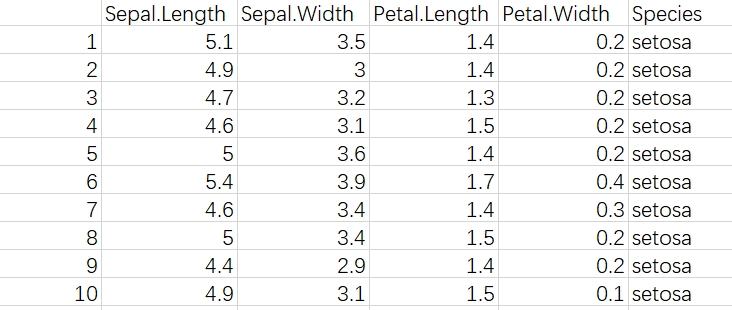

数据形式

数据预处理

data = data.drop('Unnamed: 0', axis=1):

删除数据框中名为 ‘Unnamed: 0’ 的列。这通常是由于在读取 CSV 文件时包含了索引列,而这里选择删除该列。

axis=1表示对列进行操作,axis=0表示对行进行操作

data = shuffle(data):

对数据进行洗牌(随机重排)。这样做的目的是为了打乱数据的顺序,以便更好地训练机器学习模型。

data.index = range(len(data)):

重新设置数据框的索引,确保索引从零开始、连续递增。这是为了保持数据的整洁性。

data.loc[i, ‘Species’]:

1 2 3 4 5 6 7 8 9 10 11 12 data = pd.read_csv('iris.csv' ) for i in range (len (data)): if data.loc[i, 'Species' ] == 'setosa' : data.loc[i, 'Species' ] = 0 if data.loc[i, 'Species' ] == 'versicolor' : data.loc[i, 'Species' ] = 1 if data.loc[i, 'Species' ] == 'virginica' : data.loc[i, 'Species' ] = 2 data = data.drop('Unnamed: 0' , axis=1 ) data = shuffle(data) data.index = range (len (data))

数据标准化 1 2 3 4 col_titles = ['Sepal.Length' , 'Sepal.Width' , 'Petal.Length' , 'Petal.Width' ] for i in col_titles: mean, std = data[i].mean(), data[i].std() data[i] = (data[i] - mean) / std

数据集处理

torch.from_numpy(train_x): 这一部分使用 PyTorch 中的 torch.from_numpy 函数,将 NumPy 数组 train_x 转换为 PyTorch 的张量。.type(torch.FloatTensor): 这一部分将前一步得到的张量的数据类型转换为浮点数类型(torch.FloatTensor)。这是为了确保张量中的元素都是浮点数类型,因为神经网络通常要求输入数据为浮点型。

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 train_data = data[:32 ] train_x = train_data.drop(['Species' ], axis=1 ).values train_y = train_data['Species' ].values.astype(int ) train_x = torch.from_numpy(train_x).type (torch.FloatTensor) train_y = torch.from_numpy(train_y).type (torch.LongTensor) test_data = data[-32 :] test_x = test_data.drop(['Species' ], axis=1 ).values test_y = test_data['Species' ].values.astype(int ) test_x = torch.from_numpy(test_x).type (torch.FloatTensor) test_y = torch.from_numpy(test_y).type (torch.LongTensor) train_dataset = TensorDataset(train_x, train_y) test_dataset = TensorDataset(test_x, test_y) train_loader = DataLoader(dataset=train_dataset, batch_size=16 , shuffle=True ) test_loader = DataLoader(dataset=test_dataset, batch_size=8 , shuffle=True )

构建网络 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 class Model (nn.Module): def __init__ (self ): super ().__init__() self.hidden1 = nn.Linear(4 , 10 ) self.out = nn.Linear(10 , 3 ) def forward (self, x ): x = self.hidden1(x) x = F.relu(x) x = self.out(x) return x net = Model() loss_fn = nn.CrossEntropyLoss() opt = torch.optim.SGD(net.parameters(), lr=0.001 )

训练 1 2 3 4 5 6 7 8 9 10 11 12 for epoch in range (10000 ): for i, data in enumerate (train_loader): x, y = data pred = net(x) loss = loss_fn(pred, y) opt.zero_grad() loss.backward() opt.step() if epoch % 1000 == 0 : print (loss)

训练期间损失输出: tensor(0.9600, grad_fn=<NllLossBackward0>)

tensor(0.6445, grad_fn=<NllLossBackward0>)

tensor(0.5229, grad_fn=<NllLossBackward0>)

tensor(0.4812, grad_fn=<NllLossBackward0>)

tensor(0.2039, grad_fn=<NllLossBackward0>)

tensor(0.3655, grad_fn=<NllLossBackward0>)

tensor(0.3316, grad_fn=<NllLossBackward0>)

tensor(0.2709, grad_fn=<NllLossBackward0>)

tensor(0.2131, grad_fn=<NllLossBackward0>)

tensor(0.1387, grad_fn=<NllLossBackward0>)

测试 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 def rightness (_pred, _y ): _pred = torch.max (_pred.data, 1 )[1 ] _rights = _pred.eq(_y.data.view_as(_pred)).sum () return _rights, len (_y) rights = 0 length = 0 for i, data in enumerate (test_loader): x, y = data pred = net(x) rights += rightness(pred, y)[0 ] length += rightness(pred, y)[1 ] print (y) print (torch.max (pred.data, 1 )[1 ], '\n' ) print (rights, length, rights / length)

准确率: tensor([0, 2, 0, 1, 2, 0, 2, 2])

tensor([0, 2, 0, 1, 2, 0, 2, 2])

tensor([2, 2, 0, 2, 0, 0, 2, 2])

tensor([2, 2, 0, 2, 0, 0, 2, 2])

tensor([0, 1, 0, 0, 0, 2, 2, 2])

tensor([0, 1, 0, 0, 0, 2, 2, 2])

tensor([2, 2, 2, 1, 0, 0, 1, 2])

tensor([2, 2, 2, 1, 0, 0, 1, 2])

tensor(32) 32 tensor(1.)